Automated Football Web Scraping Using Python

- gavlestrangebusine

- Jan 14, 2025

Skills Used: Python (pandas, NumPy, Requests, tkinter, json, ipywidgets, os, shutil), Excel, Data Cleaning

Full Project: https://github.com/LeFrenchy5/Football-WebScraping

Data Sources:

FBref: A comprehensive source for football statistics and history (https://fbref.com/en/comps/9/Premier-League-Stats)

Understat: A website dedicated to advanced football statistics (https://understat.com)

Data Pipeline:

Web Scraping: Data for the user-selected football teams is scraped from FBref and Understat using custom Python functions.

Data Cleaning: The scraped FBref data is cleaned and then structured into CSV files for further analysis. Understat data is written straight to CSV files, as it needs no immediate cleaning.

Storage: The cleaned data is stored into respective folders for each team.
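The storage step can be pictured with a short sketch. It assumes one folder per team under a base directory. The helper names `team_folder` and `store_csv` and the folder naming are illustrative assumptions, not taken from the repository.

```python
import shutil
from pathlib import Path


def team_folder(base_dir: str, team: str) -> Path:
    """Return the folder for a team, creating it if needed (hypothetical layout)."""
    # Replace spaces so "Manchester City" becomes "Manchester_City".
    folder = Path(base_dir) / team.strip().replace(" ", "_")
    folder.mkdir(parents=True, exist_ok=True)
    return folder


def store_csv(csv_path: str, base_dir: str, team: str) -> Path:
    """Move a finished CSV file into its team's folder and return the new path."""
    destination = team_folder(base_dir, team) / Path(csv_path).name
    shutil.move(str(csv_path), str(destination))
    return destination
```

Calling `store_csv("shots.csv", "data", "Manchester City")` would move the file to `data/Manchester_City/shots.csv`.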

Python Coding Used:

The code is split into 3 separate Python files.

Fbref.py: Scrapes data for the user-selected team from FBref and cleans it before saving it as CSV files.

Understat.py: Scrapes shot data for the user-selected team from Understat.

Main.py: Combines the functions from the two files above to gather data for the selected team, and lets the user choose which team to collect.

Function:

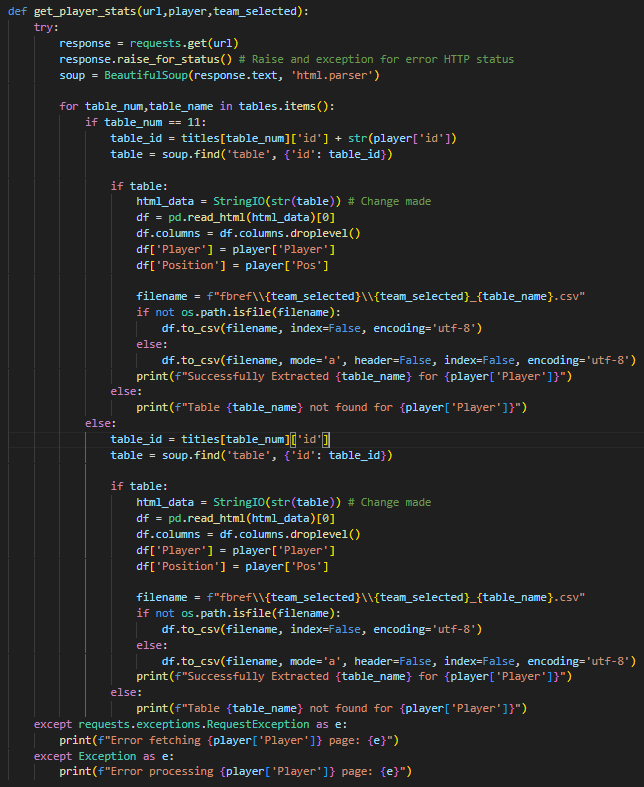

get_player_stats

Purpose

This function fetches and processes player statistics from a given FBref URL: it scrapes the data, converts it into a readable format, and stores it for further analysis.

Key Features

HTTP Requests and Parsing: Uses the requests library to make an HTTP GET request to the provided URL and the BeautifulSoup library to parse the HTML content of the response.

Table Iteration: Iterates over a dictionary of tables, extracting relevant data based on specific conditions.

Data Extraction: For each table, extracts the HTML, reads it into a pandas dataframe, and processes the columns to include player information for analysis.

File Handling: Checks whether a CSV file for the specific table already exists. If not, it creates a new file; otherwise, it appends the data to the existing file.

Error Handling: Handles HTTP request failures and general exceptions, printing a relevant error message in each case.

Code Example
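As a rough illustration of the steps listed above (not the exact code from the repository), the sketch below fetches a page, reads one table into a dataframe, tags it with the player, and appends to or creates a CSV file. The helper names, the table ids passed in, and the assumption that the table's first row holds its headers are all hypothetical.

```python
import os

import pandas as pd
import requests
from bs4 import BeautifulSoup


def fetch_html(url: str) -> str:
    """Download a page, raising requests.HTTPError on a 4xx/5xx response."""
    response = requests.get(url, timeout=30)
    response.raise_for_status()
    return response.text


def table_to_frame(html: str, table_id: str, player: str) -> pd.DataFrame:
    """Read one <table> (looked up by its id) into a DataFrame tagged with the player."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id=table_id)
    if table is None:
        raise ValueError(f"no table with id {table_id!r}")
    rows = [
        [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        for tr in table.find_all("tr")
    ]
    # Assumption: the first row holds the column headers.
    frame = pd.DataFrame(rows[1:], columns=rows[0])
    frame.insert(0, "Player", player)
    return frame


def append_or_create(frame: pd.DataFrame, csv_path: str) -> None:
    """Create the CSV on first use; afterwards append rows without repeating the header."""
    exists = os.path.isfile(csv_path)
    frame.to_csv(csv_path, mode="a" if exists else "w", header=not exists, index=False)


def scrape_player(url: str, table_ids: list, player: str, out_dir: str) -> None:
    """Fetch one page and store each requested table, printing errors instead of raising."""
    try:
        html = fetch_html(url)
        for table_id in table_ids:
            frame = table_to_frame(html, table_id, player)
            append_or_create(frame, os.path.join(out_dir, f"{table_id}.csv"))
    except requests.RequestException as err:
        print(f"HTTP request failed: {err}")
    except Exception as err:
        print(f"Unexpected error: {err}")
```

Keeping the download, parsing, and saving steps in separate helpers means the parsing and file handling can be tried on a saved HTML snippet without touching the network.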

Relevance

This function demonstrates essential web scraping, data processing, and file handling skills, which are valuable for data analysis and automation projects. It highlights my ability to work with libraries such as requests, BeautifulSoup, and pandas, and to handle and process web data effectively.

Function:

clean_csv and clean_last_5

Purpose

The clean_csv function cleans the CSV files from FBref by dropping unnecessary columns and filtering rows based on the format of the Season column. The clean_last_5 function also drops unnecessary columns and splits the Result column for easier analysis.

Key Features

Read CSV: Uses the pandas library to read the CSV file.

Drop Columns: Removes unnecessary columns from the dataframe.

Filter Rows: Keeps only rows whose 'Season' value follows the desired format (e.g. '2019 - 2020').

Modify Columns: Splits the 'Result' column into 'Result' (W, D, L) and 'Score' (e.g. 3-3) columns.

Save Cleaned Data: Saves the processed data back to the original CSV file.

Code Example
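A minimal sketch of the two cleaning steps, under stated assumptions: the columns to drop are passed in by the caller, a valid season looks like '2019-2020' or '2019 - 2020', and a result is stored as a letter followed by the score (for example 'W 3-1'). The repository code may differ in all of these details.

```python
import pandas as pd

# Assumed season format: four digits, a hyphen (spaces optional), four digits.
SEASON_PATTERN = r"^\d{4}\s*-\s*\d{4}$"


def clean_csv(path: str, drop_columns: list) -> pd.DataFrame:
    """Drop unwanted columns and keep only rows with a well-formed Season value."""
    df = pd.read_csv(path)
    df = df.drop(columns=drop_columns, errors="ignore")
    keep = df["Season"].astype(str).str.match(SEASON_PATTERN)
    df = df[keep]
    df.to_csv(path, index=False)  # overwrite the original file
    return df


def clean_last_5(path: str, drop_columns: list) -> pd.DataFrame:
    """Drop unwanted columns and split Result into Result (W/D/L) and Score."""
    df = pd.read_csv(path)
    df = df.drop(columns=drop_columns, errors="ignore")
    # Assumption: Result looks like "W 3-1"; rows that do not match become NaN.
    parts = df["Result"].str.extract(r"^([WDL])\s+(.+)$")
    df["Result"] = parts[0]
    df["Score"] = parts[1]
    df.to_csv(path, index=False)
    return df
```

Filtering on the season pattern removes summary rows (such as career totals) that share the table with the per-season rows.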

Relevance

These functions demonstrate my ability to preprocess data, a crucial step in data analysis and machine learning projects. Ensuring data integrity and consistency makes it easier to derive meaningful insights and build reliable models.

Function:

get_player_shots

Purpose

This function scrapes shot data from understat.com for each player in the user-selected team, compiles it into a single team shots dataframe, processes it, and stores it for further analysis.

Key Features:

Player Iteration: Iterates over the player dictionary, which maps player names to their IDs, constructs each URL from the base URL and the player ID, and fetches the webpage content.

HTML Parsing: Uses BeautifulSoup to parse the HTML content of the response and extract the script tags that contain shot data.

Data Extraction & JSON Processing: Extracts and decodes the JSON data from the script tags, converts the JSON string into Python objects, and normalizes it into a dataframe.

Storage: Saves the dataframe to a CSV file named after the selected team, to be used for further analysis.

Code example
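The parsing and decoding steps can be sketched as follows. This is an illustration, not the repository code: it assumes the page embeds the shots as an escaped string inside `JSON.parse('...')` assigned to a variable called `shotsData`, which may not match the live site. It also takes already-downloaded HTML instead of making the requests itself.

```python
import json

import pandas as pd
from bs4 import BeautifulSoup


def extract_shots(html: str, variable: str = "shotsData") -> list:
    """Find the script that assigns `variable` and decode its JSON.parse('...') payload."""
    soup = BeautifulSoup(html, "html.parser")
    for script in soup.find_all("script"):
        text = script.string or ""
        if variable not in text:
            continue
        # Keep only what sits between (' and ') after the variable name.
        start = text.index("('", text.index(variable)) + 2
        end = text.index("')", start)
        escaped = text[start:end]
        # Turn \x22-style escapes back into real characters before parsing.
        decoded = escaped.encode("utf8").decode("unicode_escape")
        return json.loads(decoded)
    return []


def team_shots(pages: dict) -> pd.DataFrame:
    """Combine the shots of several players ({name: page_html}) into one DataFrame."""
    frames = []
    for name, html in pages.items():
        shots = extract_shots(html)
        if shots:
            frame = pd.json_normalize(shots)
            frame["player_name"] = name  # hypothetical column name
            frames.append(frame)
    return pd.concat(frames, ignore_index=True) if frames else pd.DataFrame()
```

In a full pipeline, each entry in `pages` would come from an HTTP request built from the base URL and the player's ID, and the combined dataframe would be saved with `to_csv` under the team's name.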

Relevance

This function showcases web scraping, data extraction, and processing skills using libraries such as requests, BeautifulSoup, and pandas. It highlights my ability to work with JSON data and perform data normalization.

Main.py:

Purpose

Main.py serves as the central script that integrates the functionality of Fbref.py and Understat.py. It provides an interface for the user to select a league and football team, fetches the relevant data, and starts the web scraping and data cleaning processes.

Key Features:

User Interface: Uses the Tkinter library to create a graphical user interface in which the user selects a league and team from dropdown menus.

Event Handling: Uses event handlers to update the team options, fetch team links, and enable the completion button based on the user's interactions.

Data Integration: Combines the functions from Fbref.py and Understat.py to run the web scraping and data cleaning, producing data the user can take into further analysis.

Code Example
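The interaction described above can be sketched with two linked dropdowns and a button that stays disabled until a team is picked. The league and team names are hypothetical sample data (the project fetches real team links), and `teams_for`, `build_ui`, and `on_confirm` are illustrative names, not the ones used in Main.py.

```python
LEAGUES = {  # hypothetical sample data standing in for the scraped team lists
    "Premier League": ["Liverpool", "Arsenal"],
    "La Liga": ["Real Madrid", "Barcelona"],
}


def teams_for(league: str) -> list:
    """Return the team names to offer once a league is chosen."""
    return sorted(LEAGUES.get(league, []))


def build_ui(on_confirm):
    """Build the window; call .mainloop() on the returned root to show it."""
    # Imported here so teams_for() can be used on machines without a display.
    import tkinter as tk
    from tkinter import ttk

    root = tk.Tk()
    root.title("Select a team")

    league_box = ttk.Combobox(root, values=sorted(LEAGUES), state="readonly")
    team_box = ttk.Combobox(root, state="readonly")
    confirm = ttk.Button(
        root,
        text="Confirm",
        state="disabled",
        command=lambda: on_confirm(league_box.get(), team_box.get()),
    )

    def league_chosen(_event):
        # Refresh the team list and block the button until a team is picked.
        team_box.set("")
        team_box["values"] = teams_for(league_box.get())
        confirm.state(["disabled"])

    def team_chosen(_event):
        confirm.state(["!disabled"])

    league_box.bind("<<ComboboxSelected>>", league_chosen)
    team_box.bind("<<ComboboxSelected>>", team_chosen)

    for widget in (league_box, team_box, confirm):
        widget.pack(padx=10, pady=5)
    return root
```

To try it, run `build_ui(lambda league, team: print(league, team)).mainloop()`. In the project, the callback would be the place to start the scraping and cleaning functions for the chosen team.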

Relevance

This script demonstrates my ability to create a user-friendly interface for data selection and to integrate multiple components into a streamlined data collection and processing workflow. It highlights my proficiency with Tkinter for GUI development, pandas for data manipulation, and the integration of web scraping functions from other Python scripts.

Examples of Analysis:

Analysis of team or individual performances over current or past seasons.

Comparison of player statistics across teams/leagues.

Visualization of team or player shot maps per season (or all time).

Future Work:

Incorporate advanced analytics and visualizations for user selected teams.

Automate the update process to keep data up to date for analysis.

Extend the project to include more leagues and teams.

Extend the project to include more statistics and websites.

Improvements:

Fix the issue with the individual 'Player club summary' table on FBref.

Make the table mappings more efficient.

Make fuller use of Tkinter and windows to make the project more user-friendly and advanced.

Conclusion:

This project demonstrates the powerful combination of web scraping, data cleaning, and data analysis techniques. Using Python and several of its libraries, I've developed a system that gathers and processes football statistics from multiple sources for a user-selected team. This system lets the user carry out detailed analysis and visualization of football data.

Through this project, I have demonstrated my proficiency in:

Web Scraping: Efficiently extracting data from web pages using custom functions and libraries such as requests and BeautifulSoup.

Data Cleaning: Ensuring the integrity and usability of data through thoughtful preprocessing steps.

Data Integration and Storage: Combining data from different sources and storing it in structured formats for analysis.

User Interface Development: Creating user-friendly interfaces with Tkinter for smooth data selection and interaction.

I am excited about the potential future enhancements to this project, including more advanced analytics, expanded data sources, and enhanced user interfaces. I look forward to leveraging these skills in future projects and collaborations.

For the complete code and detailed implementation, please visit the full project repository on GitHub.

Full Project: https://github.com/LeFrenchy5/Football-WebScraping
